Transflective LCDs don’t look great though, especially when viewed at an angle, or when the room is bright enough to light the reflective layer but dark enough to require the backlight.

Are you living in the same country as the people complaining about ads in Windows? From what I understand, this has not been rolled out globally yet.

Microsoft always treated Linux and FOSS with disdain under Bill Gates and Steve Ballmer. Their current CEO is an outlier, openly embracing and extending FOSS and Linux. After years of abuse from Gates and Ballmer, many people in the Linux community won’t be so quick to trust them.

Big corps love A/B testing, slow rollouts and geo-restricted features. You might be in a different group than people that get all these ads.

Just turn off “show scores” in your profile and you’ll be happier. It’s a meaningless number anyway.

Imagine donating your body to science and the scientists slice your brain and scan it, then decades later you suddenly wake up in a virtual space because the scientists are finally able to emulate a copy of your brain in a supercomputer.

Why would they do that when they can use this as yet another push to move people to Windows 11?

The books they loaned are loaded with DRM though, which makes them unusable after the loan period expires, so it’s not like they’re handing out unlocked PDFs en masse like Z-Library. They probably thought this restriction was good enough and that publishers had enough goodwill to let it slide during the height of the pandemic.

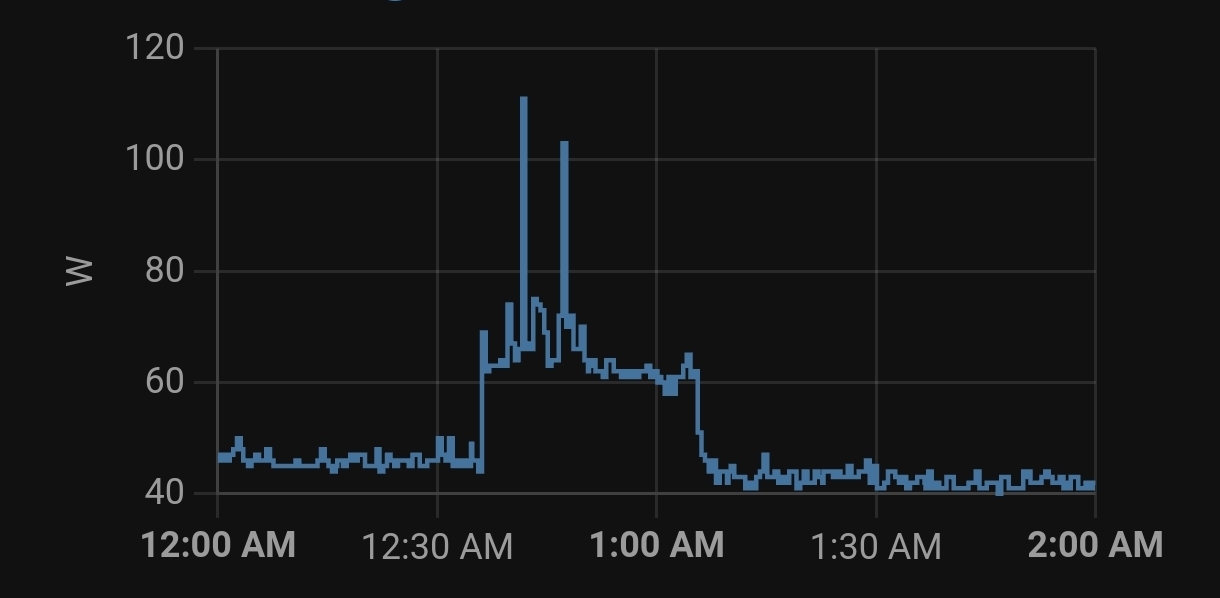

For comparison, I run a ThinkStation P300 with an i7-4790 (TDP 84W) 24/7 and the power usage looks like this:

Even when idling, this old processor still guzzles 45W. Certainly not as nice as GP’s, which only uses 10W at idle.

Power scaling on these old CPUs is not great though. Mine is slightly newer and at idle it still uses 50% of the TDP.

Each Xeon E5-2670 has a 115W TDP, which means 2x115=230W for the processors alone. With 8 RAM modules @ ~3W each, it’s going to guzzle ~250W under load, while screaming like a jet engine. Assuming it runs like that 24/7 at $0.12/kWh, that’s $262.8 per year for electricity alone.

Would be great if you have an isolated server room to contain the noise and cheap electricity, but a more modern workstation should use a quarter of that electricity or even less.
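If you want to redo the math for your own hardware, here is a rough sketch of the arithmetic above. It assumes a constant draw around the clock, which is a simplification; the function name and default rate are just for illustration.

```python
HOURS_PER_YEAR = 24 * 365  # 8760 hours, assuming the machine runs 24/7


def annual_cost_usd(watts, usd_per_kwh=0.12):
    """Yearly electricity cost for a constant power draw of `watts`."""
    kwh_per_year = watts * HOURS_PER_YEAR / 1000
    return kwh_per_year * usd_per_kwh


cpu_watts = 2 * 115  # two Xeon E5-2670 at 115 W TDP each
ram_watts = 8 * 3    # eight RAM modules at roughly 3 W each

print(cpu_watts + ram_watts)           # 254, rounded down to ~250 W above
print(round(annual_cost_usd(250), 2))  # 262.8
```

Plug in your own idle wattage (e.g. `annual_cost_usd(45)`) to see what a box costs when it mostly sits idle.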

They’ve been playing cat and mouse with Google for a while now, with Google repeatedly breaking YouTube access from NewPipe.

Google Reader was the best. Not sure why Google killed it, but it was really good at both content discovery and keeping up with sites you’re interested in. I tried several alternatives but nothing came close, so I gave up and hung out more on forums / link aggregators like Slashdot, Hacker News, Reddit and now Lemmy for content discovery. I’m also interested to hear what others use.

Take that FreeDOS!

Marginalia is interesting because it attempts to search non-commercial content. This might unearth some content you can’t find on Google. If you search for something on Google and the results are full of spam or e-commerce product pages, try the same keywords on Marginalia. Unlike Google, it’s a keyword search engine, so don’t ask it a question; instead, enter keywords likely to appear in the content you’re looking for.

Kagi is a paid search engine. It does use data from other big search engines, but applies its own weighting and filtering and unearths content normally buried on the big search engines. There is a free trial account if you want to test it yourself to see if it’s better than Google for your use case.

There are also various SearXNG instances. SearXNG is an open-source metasearch engine, which uses data from other search engines. Each instance may be configured differently, so you might want to test a few of them to decide which one works best for your use case.

An interesting comparison: https://danluu.com/seo-spam/

The March 2019 core update to search, which happened about a week before the end of the code yellow, was expected to be “one of the largest updates to search in a very long time.” Yet when it launched, many found that the update mostly rolled back changes, and traffic was increasing to sites that had previously been suppressed by Google Search’s “Penguin” update from 2012 that specifically targeted spammy search results, as well as those hit by an update from August 1, 2018, a few months after Gomes became Head of Search.

Search engagement is declining, so the obvious fix is to make the search results worse, which means people have to search more to find what they need. Engagement metrics went through the roof! Crisis averted!

Thanks to this fuck-up, competition is a thing again in the search engine space. Other search engines are getting better and starting to capture the fleeing users.

Yes, but autossh will automatically try to reestablish the connection when it’s down, which is perfect for servers behind CGNAT that you can’t physically access. Basically a set-up-and-forget kind of app.

If this server is running Linux, you can use autossh to forward some ports through another server. In this example, they only use it to forward the SSH port, but it can be used to forward any port you want: https://www.jeffgeerling.com/blog/2022/ssh-and-http-raspberry-pi-behind-cg-nat
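As a rough sketch of what such a tunnel looks like, the small Python helper below only builds and prints an autossh command line; it does not run anything. The host `vps.example.com`, the user `tunnel`, and port 2222 are placeholders I made up, not values from the linked post. The flags are standard: `-M 0` turns off autossh’s own monitor port so it relies on ssh’s `ServerAlive*` options, `-N` skips running a remote command, and `-R` opens the reverse forward.

```python
import shlex


def autossh_reverse_tunnel(remote_host, remote_port, local_port=22, user="tunnel"):
    """Build an autossh argv that exposes localhost:local_port on the remote host."""
    return [
        "autossh",
        "-M", "0",                         # no monitor port; rely on ssh keepalives
        "-N",                              # tunnel only, no remote command
        "-o", "ServerAliveInterval=30",    # send a keepalive every 30 seconds
        "-o", "ServerAliveCountMax=3",     # drop the link after 3 missed replies
        "-o", "ExitOnForwardFailure=yes",  # exit if the forward cannot be set up
        "-R", f"{remote_port}:localhost:{local_port}",
        f"{user}@{remote_host}",
    ]


# Placeholder host and port, purely for illustration.
cmd = autossh_reverse_tunnel("vps.example.com", 2222)
print(shlex.join(cmd))
```

Run the printed command on the machine behind CGNAT (typically from a systemd unit so it starts at boot), then `ssh -p 2222 localhost` from the VPS should land on the home server, assuming key-based login is already set up.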

By “remotely accessible”, do you mean remotely accessible to everyone or just you? If it’s just you, then you don’t need to set up a reverse proxy. You can use your router as a VPN gateway (assuming you have a static IP address) or you can use Tailscale or ZeroTier.

If you want to make your services remotely accessible to everyone without using a VPN, then you’ll need to expose them to the world somehow. How to do that depends on whether you have a static IP address or are behind CGNAT. If you have a static IP, you can forward ports 80 and 443 to your reverse proxy (e.g. Nginx Proxy Manager), which works best if you have your own domain name so you can map each service to its own subdomain. If you’re behind CGNAT, you’re going to need an external server/VPS that routes traffic arriving on its ports 80 and 443 into your home network, essentially granting you a static IP address.

You can buy the Xreal glasses separately for $449: https://us.shop.xreal.com/products/xreal-air-2-pro