- 5 Posts

- 4 Comments

Joined 6 months ago

Cake day: March 17th, 2025

32·1 month ago

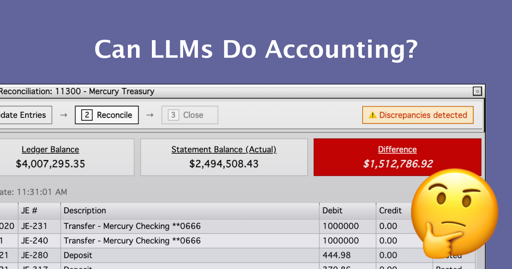

State-of-the-art LLM agents do not perform calculations themselves; they call external tools to do that.
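For anyone curious what "calling an external tool" can look like, here is a minimal sketch. It assumes the model emits a tool call as a dict of the form `{"name": ..., "arguments": {...}}`; that shape, the `TOOLS` registry, and the `calculator` and `run_tool_call` names are all hypothetical and not taken from any particular agent framework.

```python
import ast
import operator

# Arithmetic operators this hypothetical calculator tool is willing to evaluate.
_BIN_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
}
_UNARY_OPS = {ast.USub: operator.neg, ast.UAdd: operator.pos}


def calculator(expression):
    """Evaluate a plain arithmetic expression without using eval()."""

    def _eval(node):
        # Numeric literals only (bool is excluded on purpose).
        if (
            isinstance(node, ast.Constant)
            and isinstance(node.value, (int, float))
            and not isinstance(node.value, bool)
        ):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
            return _BIN_OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
            return _UNARY_OPS[type(node.op)](_eval(node.operand))
        # Anything else (names, calls, attributes, ...) is rejected.
        raise ValueError("unsupported expression")

    return _eval(ast.parse(expression, mode="eval").body)


# Hypothetical registry mapping tool names to plain Python functions.
TOOLS = {"calculator": calculator}


def run_tool_call(call):
    """Dispatch a tool call of the assumed form {"name": ..., "arguments": {...}}."""
    return TOOLS[call["name"]](**call["arguments"])


if __name__ == "__main__":
    # The model would produce this dict; the surrounding program does the math.
    print(run_tool_call({"name": "calculator", "arguments": {"expression": "12 * (3 + 4)"}}))
```

The point of the design is that the model only has to produce the expression as text; the arithmetic itself is done by ordinary code, and the result is fed back into the conversation.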

2·1 month ago

To be fair, not all of an LLM's knowledge comes from its training material. The other way is to provide context alongside the instructions.

I can imagine someone someday developing a decent way for LLMs to write down their mistakes in a database, along with some clever way to recall the most relevant memories when needed.
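A toy version of that idea might look like the sketch below. Everything in it is an assumption for illustration: the `MistakeMemory` class, the `mistakes` table, and `build_prompt` are made-up names, and the "clever recall" is replaced by plain word overlap (Jaccard similarity), which is a crude stand-in for whatever a real system would use.

```python
import re
import sqlite3


def _words(text):
    """Lowercased set of alphanumeric tokens in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class MistakeMemory:
    """Hypothetical store of past mistakes backed by SQLite."""

    def __init__(self, path=":memory:"):
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS mistakes "
            "(id INTEGER PRIMARY KEY, note TEXT NOT NULL)"
        )

    def record(self, note):
        """Write one mistake down."""
        self.db.execute("INSERT INTO mistakes (note) VALUES (?)", (note,))
        self.db.commit()

    def recall(self, query, k=2):
        """Return up to k notes that share the most words with the query."""
        q = _words(query)
        scored = []
        for (note,) in self.db.execute("SELECT note FROM mistakes ORDER BY id"):
            n = _words(note)
            union = q | n
            score = len(q & n) / len(union) if union else 0.0
            if score > 0:
                scored.append((score, note))
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [note for _, note in scored[:k]]


def build_prompt(memory, instruction):
    """Prepend recalled mistakes to the instruction as extra context."""
    notes = memory.recall(instruction)
    if not notes:
        return f"Task: {instruction}"
    context = "\n".join(f"- {note}" for note in notes)
    return f"Past mistakes to avoid:\n{context}\n\nTask: {instruction}"


if __name__ == "__main__":
    memory = MistakeMemory()
    memory.record("Forgot to convert miles to kilometers in the distance answer")
    memory.record("Used the wrong year when computing a date difference")
    print(build_prompt(memory, "How many kilometers is 10 miles?"))
```

Nothing here changes the model's weights; the recalled notes are simply added to the context, which is the "provide context to instructions" route mentioned above.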

10·4 months ago

It is literally an algorithm made to hallucinate. The fact that it outputs accurate facts is more of a side effect.

Can I use it? And if not: when can I use it?